A/A Testing: How I increased conversions 300% by doing absolutely nothing

There are few things wantrepreneurs (all due respect, I’m a recovering wantrepreneur myself) love to talk about more than running A/B tests.

The belief seems to be that if they just keep testing, they will find the answer, and build the business of their dreams.

Most of them are wrong. Many of their businesses would be better off if they didn’t run any A/B tests at all.

If you’re going to test, you should try A/A testing

At the very least, many of these wantrepreneurs would be doing themselves a favor if they would run A/A tests.

In an A/A test, you run a test using the exact same options for both “variants” in your test.

That’s right, there’s no difference between “A” and “B” in an A/A test. It sounds stupid, until you see the “results.”

I ran nothing but A/A tests for 8 months. And you won’t believe what are you still reading this subhead?

From June through January, I ran nothing but A/A tests in the emails I sent through MailChimp. 56 different campaigns, comprising more than 750,000 total emails, and I didn’t even test a subject line. (I’ve since switched from MailChimp to ActiveCampaign.)

That didn’t stop me from having a six-figure year in my fully-bootstrapped, solopreneur business.

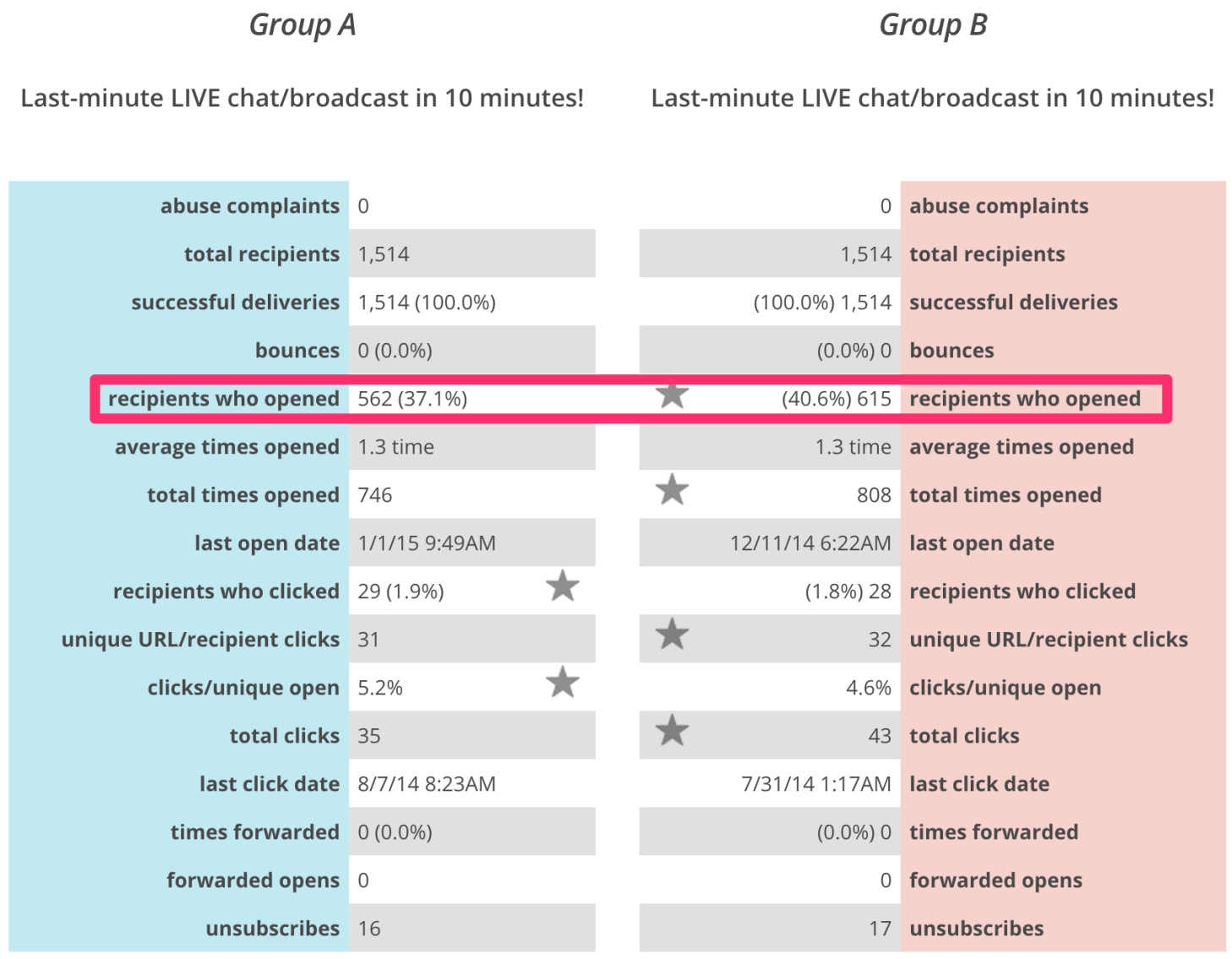

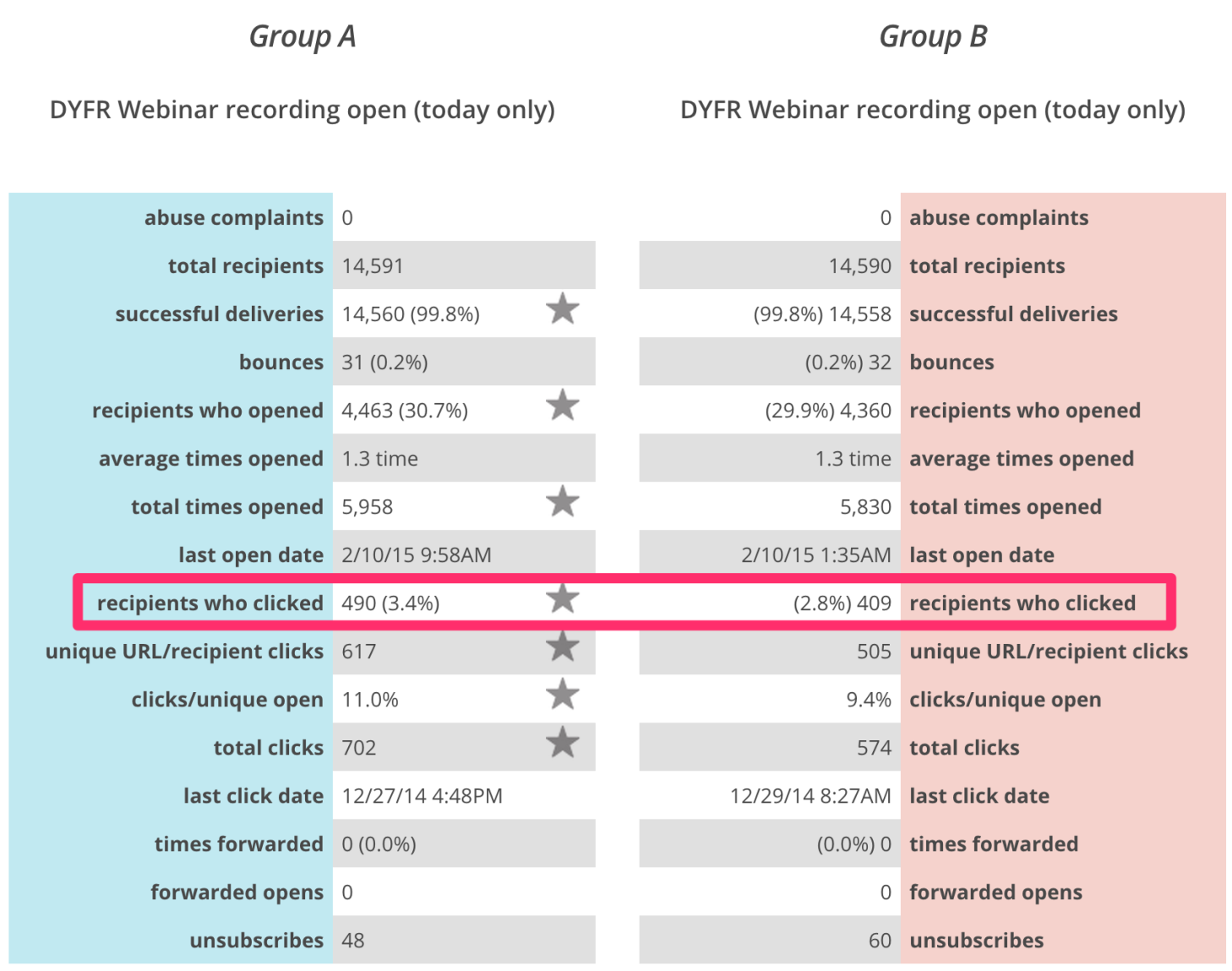

During that time, I came across “results” like this.

I get a kick out of A/B testing the *exact same* email & finding statistically different performance pic.twitter.com/T6mR8beDnS

— David Kadavy (@kadavy) May 30, 2014

To many wantrepreneurs (my former self included), this looks like “oh wow, you increased opens by 10%!” They may even punch it into Visual Website Optimizer’s significance calculator and see that p=.048. “It’s statistically significant!” they (or I) might exclaim.

In fact, when I shared results of A/A tests like this, many people refused to believe me. They’d say things like:

“How are the emails different?” (They’re not.)

“Were they sent at different times?” (No.)

“What did you change?” (Nothing.)

IT’S AN A/A TEST! It’s the exact same email, sent at the exact same time, using whatever technology MailChimp uses to send A/B tests.

“Ah, but what about MailChimp’s technology?” Well, you could call that into question, but consider this…

…to a trained statistician, there is nothing remarkable about these “results.” Given the baseline conversion rate on opens, the sample size simply isn’t large enough to get a reliable result. What’s happening here is just the silly tricks our feeble human minds play on us when we try to measure things.

Even if you do have a large enough sample size, you’re bound to get the occasional “false positive” or “false negative.” Meaning that you could make the completely wrong decision based upon false information.
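
Here’s a minimal Python sketch of that idea, for anyone who wants to watch false positives appear out of thin air. It is not how MailChimp or any testing tool works internally. The function names, the 20% open rate, and the 1,000 recipients per branch are all made-up assumptions for illustration. Both branches are drawn from the same true rate, and a standard pooled two-proportion z-test decides whether each test “wins.”

```python
import random
from statistics import NormalDist


def two_proportion_p_value(hits_a, n_a, hits_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (hits_a + hits_b) / (n_a + n_b)
    std_err = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (hits_a / n_a - hits_b / n_b) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_tests, n_per_branch, true_rate, alpha=0.05, seed=0):
    """Return the share of A/A tests that look 'significant' by chance."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_tests):
        # Both branches use the same true rate: there is nothing to find.
        hits_a = sum(rng.random() < true_rate for _ in range(n_per_branch))
        hits_b = sum(rng.random() < true_rate for _ in range(n_per_branch))
        p = two_proportion_p_value(hits_a, n_per_branch, hits_b, n_per_branch)
        if p < alpha:
            false_positives += 1
    return false_positives / n_tests


if __name__ == "__main__":
    # Hypothetical numbers: 500 A/A tests, 1,000 recipients per branch.
    share = simulate_aa_tests(n_tests=500, n_per_branch=1000, true_rate=0.2)
    print(f"{share:.1%} of identical A/A tests came out 'significant'")
```

With a 5% significance threshold, you should expect somewhere in the neighborhood of 1 in 20 of these identical tests to be flagged as a winner, purely by chance.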

The amazing “results” I got on my A/A tests

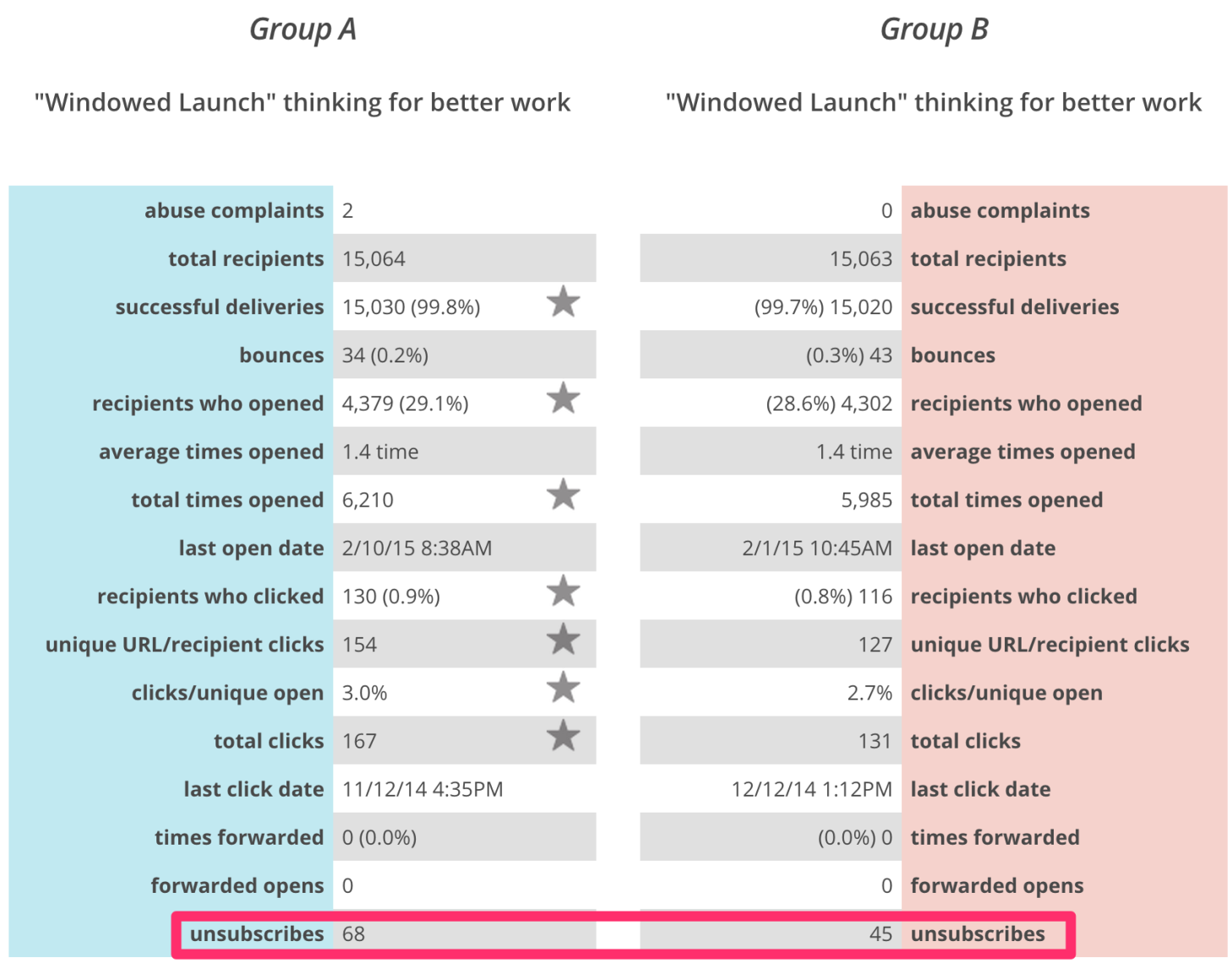

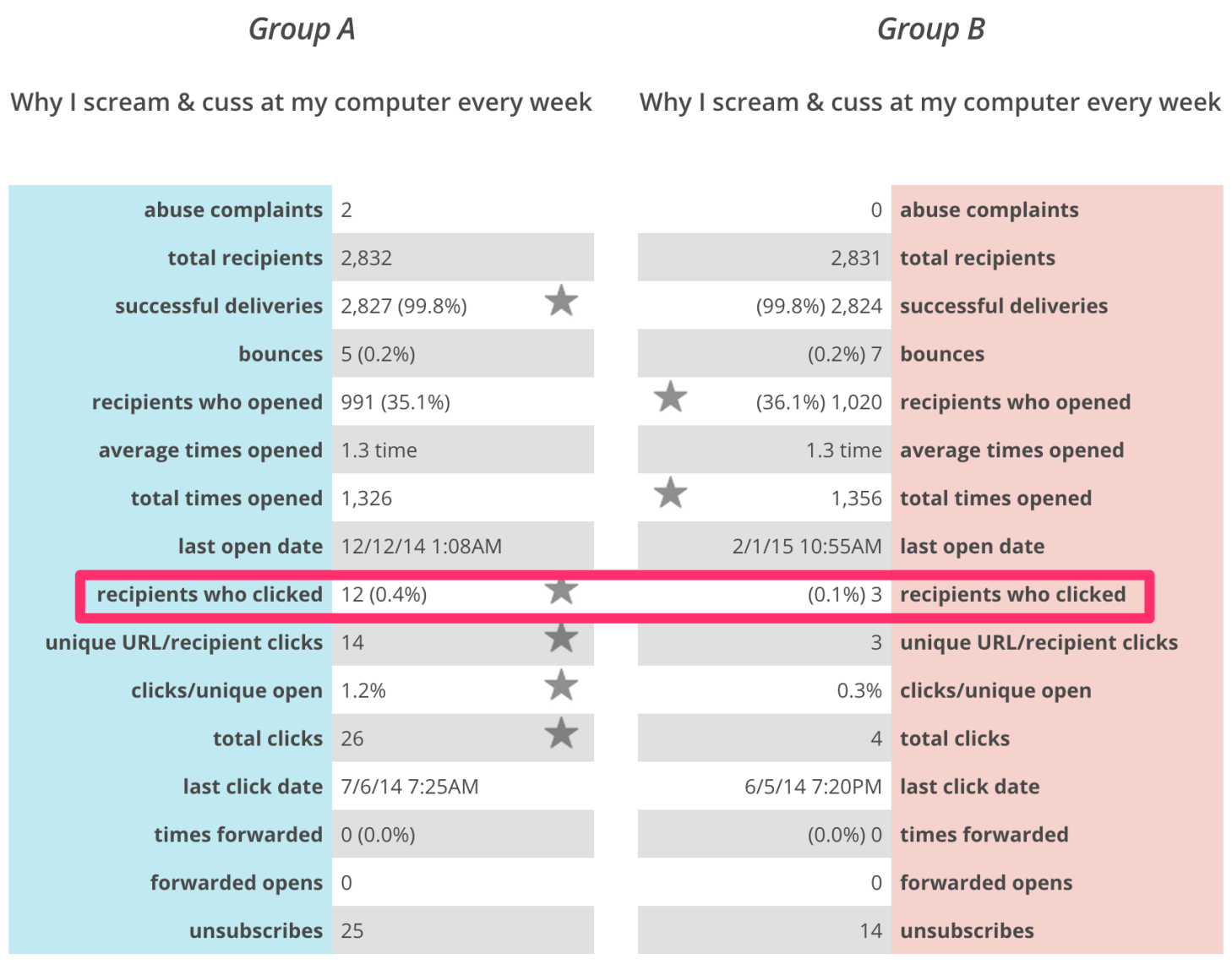

Running an A/A test for every email for 8 months really gave me a feel for how misleading A/B test “results” can be. Check out some of the “results” I got from changing nothing at all.

A 9% increase in opens!

A 20% increase in clicks!

A 51% lower unsubscribe rate!

Finally, an incredible 300% increase in clicks, all by simply doing absolutely nothing!

Of course, to an experienced eye, it’s clear that none of these “tests” have a large enough sample size (when taking into account the baseline conversion rate) to be significant.

To a wantrepreneur’s eye, however, they’ve just validated (or invalidated) their hypothesis. They may give up their entire vision based upon “results” like these.

You can see the “results” of all 56 of these email campaigns compiled in this handy spreadsheet.

Is it significant? Does it f*cking matter!?

I spent hours poring over articles, learning just how to run a reliable, significant A/B test, and I came to this conclusion: It Doesn’t F*cking Matter.

To me, it doesn’t matter, and to most budding businesses, it doesn’t matter.

Here’s why IDFM.

Reason 1 IDFM: A/B testing (correctly) takes tremendous energy

To run a test that asks an important question, that uses a large enough sample size to come to a reliable conclusion, and that can do so amidst a minefield of different ways to be led astray, takes a lot of resources.

You have to design the test, implement the technology, and come up with the various options. If you’re running a lean organization, there are few cases where this is worth the effort.

Why create a half-assed “A” and a half-assed “B,” when you could just make a full-assed “A?”

As a bootstrapped solopreneur, I realized that every unit of mind energy I used to run an A/B test could have been put towards making one option that was a more lucid interpretation of my vision.

Reason 2 IDFM: A/B testing is no substitute for vision

In Zero to One, Peter Thiel warns against “incrementalism,” or just working to improve what’s already out there. Our world needs entrepreneurs with vision, and if they’re busy second-guessing and testing everything (and often making the incorrect decisions based upon these tests), that’s a sad thing for humanity.

Even Eric Ries, one of the forefathers of the Lean Startup movement that has spawned the cult of A/B testing, implicitly warns against taking testing too seriously in his book, The Lean Startup:

We cannot afford to have our success breed a new pseudoscience around pivots, MVPs, and the like. This was the fate of scientific management, and in the end, I believe, that set back its cause by decades. Science came to stand for the victory of routine work over creative work, mechanization over humanity, and plans over agility. Later movements had to be spawned to correct those deficiencies.

–Eric Ries, The Lean Startup

Many wantrepreneurs want to use A/B testing as a substitute for having entrepreneurial vision. There’s no doubt that the concepts introduced in The Lean Startup are powerful, but some take it too far, or just misinterpret those ideas.

It’s sad, really. It makes you wonder how many great ideas have been (in)”validated” into extinction.

I do have a strong vision for my company, which is rooted in a tightly-knit point-of-view of How Our World Works. I’m more interested in using my energy to hone that viewpoint (by learning about history, economic and technological trends, etc.) and executing than I am in second-guessing what words I use in a call-to-action button.

Reason 3 IDFM: Statistics isn’t your core competency

Unfortunately, the web is packed with misinformation about A/B testing, usually perpetuated either by

- Wantrepreneurs who don’t know how to run a reliable test OR

- People or companies that have something to gain from making people think A/B testing is the answer to all of their problems (they sell testing tools, are after page views, etc.). It’s not a vast conspiracy, it’s just how capitalism works.

However, I did come across a few good resources that really explained the complexities of running a reliable test.

What I realized was

- If I really want to run a reliable A/B test, the information is out there.

- It’s damn complicated.

- It wouldn’t come naturally to me.

It’s not that I’m bad at math. It’s that I’m not that good at math.

Unless you’re someone who is properly trained, and really understands statistics, you should be wary of running tests. Even then, remember that “to someone with a hammer, everything looks like a nail.”

Reason 4 IDFM: You aren’t Google

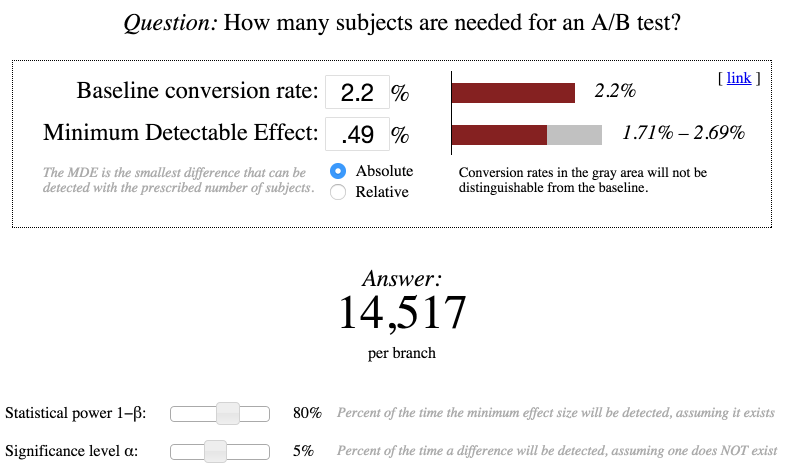

At close to 30,000 subscribers, I have a decent-sized email list for a solopreneur. But, what can I test with that? Turns out, not much.

Using Evan Miller’s awesome sample size calculator, let’s see what kind of sample sizes I really need.

If I want to test the click-through rate on two different emails, my baseline rate would be about 2.2%. That’s about the CTR I can expect if I’m actually trying to get clicks in an email.

It turns out, with a list about my size (14,517 per branch = 29,034), I could begin to detect a difference with an absolute change of about 0.49 percentage points (from 2.2% to roughly 2.7%), which is about a 22% relative increase or decrease in clicks.

That’s a big difference, and that’s when I would start to “know” I had a winner. The caveat is that a real difference of that size will be detected only 80% of the time (statistical power = 80%), and when there actually is no difference, I’ll still be told there is one 5% of the time (significance level = 5%).
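
To make that arithmetic less of a black box, here’s a rough Python sketch of a sample size calculation. It uses a common normal-approximation formula for comparing two proportions. The calculator may use a different method, so treat any match as approximate. The function name and parameters are hypothetical, and the inputs are just the numbers from above: a 2.2% baseline, a 0.49 percentage point change, 5% significance, and 80% power.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_branch(baseline, absolute_effect, alpha=0.05, power=0.80):
    """Approximate subjects needed per branch for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # cutoff for the significance level
    z_power = NormalDist().inv_cdf(power)          # cutoff for the desired power
    variant = baseline + absolute_effect
    # Spread of the difference if nothing changed, and if the effect is real.
    sd_null = sqrt(2 * baseline * (1 - baseline))
    sd_alt = sqrt(baseline * (1 - baseline) + variant * (1 - variant))
    numerator = (z_alpha * sd_null + z_power * sd_alt) ** 2
    return ceil(numerator / absolute_effect ** 2)


if __name__ == "__main__":
    n = sample_size_per_branch(baseline=0.022, absolute_effect=0.0049)
    print(f"about {n:,} subscribers per branch, {2 * n:,} in total")
```

Under these assumptions the answer should land close to the per-branch figure quoted above. Try halving `absolute_effect` to see how quickly the required list size balloons: the sample size grows roughly with the inverse square of the effect you want to detect.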

To make a solid ROI even more unlikely, a click in an email doesn’t necessarily put money in my pocket. Different types of prospects behave differently.

For example, a long email may generate fewer clicks, but the clicks that it does generate will be prospects that are more interested, warmer, and more likely to convert.

Using historical data, if I want to find a significant lift in buys from a single email, I’m going to need to make a “B” that converts 70% more customers than option “A.”

I’m not Google. With the sample sizes I can put together, I can spend my energy either trying to master the Jedi mind trick, or using best practices to concentrate on making my products and offerings more enticing.

Should you test? It depends

There’s no denying the power of the scientific method. When applied correctly, it can be invaluable in guiding an entrepreneur when important questions about a business arise.

Here are a few questions to ask yourself to decide whether you should run an A/B test:

- Do I have an important question? Will answering this question make an impact worth the effort of running a test?

- What else could I be doing with my energy? Running experiments and being creative and visionary are two completely different brain modes. Are you distracting yourself from making valuable insights?

- Can I run a large enough test? Plug your baseline conversion rate and “minimum detectable effect” into this sample size calculator to see if you have a chance at even reaching statistical significance.

- Do I really understand testing? Try running A/A tests to get a feel for how misleading “results” can be. If you still feel testing is worth it, read and understand some of the resources below to learn how to run a reliable test.

Also, keep in mind there are other ways for businesses to “test.” I really like the let’s-try-this-and-see-how-it-goes-then-iterate test.

So, Einstein, how is it that you run a good test?

The answer is: I don’t really know. In fact, it’s likely that I butchered some of the above terminologies and methodologies (which is the point, really).

There really are people out there trying hard to put forth accurate information about A/B testing, but some people just don’t seem to read it. Here are some resources that I found credible and helpful:

- How data will make you do totally the wrong thing. If you only consult one of these resources, this should be the one. The straight dope on statistics and testing by a successful entrepreneur, and trained statistician (WP Engine founder, Jason Cohen).

- How not to run an A/B test. Why you need to determine your sample size before you start testing, and why your A/B testing platform may be exponentiating your rate of false positives.

- The low base rate problem. How could my above results have seemed significant, but not been? Statistical power. Learn about it, and how it makes most tests with low “base rates” useless. I love this quote: “anyone who lacks a firm understanding of statistical power should not be designing or interpreting A/B tests.”

- 12 A/B split testing mistakes I see businesses make all of the time. Friend and conversion expert Peep Laja has lots of solid content on testing on his blog. This is a good introduction.
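
The point about fixing your sample size before you start can be illustrated with a small simulation. This is only a sketch under invented assumptions (a 20% conversion rate, 10 “looks” at the data, 200 new recipients per branch between looks, and hypothetical function names), not a description of any particular testing platform. It runs A/A tests and compares two habits: declaring a winner the first time the numbers look significant, versus checking once at the planned end.

```python
import random
from statistics import NormalDist


def is_significant(hits_a, hits_b, n, alpha=0.05):
    """Pooled two-proportion z-test for two branches of equal size n."""
    pooled = (hits_a + hits_b) / (2 * n)
    std_err = (pooled * (1 - pooled) * 2 / n) ** 0.5
    if std_err == 0:
        return False
    z = abs(hits_a - hits_b) / n / std_err
    return 2 * (1 - NormalDist().cdf(z)) < alpha


def peeking_vs_fixed(n_tests=300, looks=10, batch=200, rate=0.2, seed=0):
    """Return (false positive rate when peeking, rate with one final look)."""
    rng = random.Random(seed)
    peeking_hits = 0
    fixed_hits = 0
    for _test in range(n_tests):
        hits_a = hits_b = n = 0
        ever_significant = False
        significant_now = False
        for _look in range(looks):
            # Both branches are identical, so any "winner" is a false positive.
            hits_a += sum(rng.random() < rate for _ in range(batch))
            hits_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            significant_now = is_significant(hits_a, hits_b, n)
            ever_significant = ever_significant or significant_now
        peeking_hits += ever_significant  # stopped at the first "win"
        fixed_hits += significant_now     # judged only at the final look
    return peeking_hits / n_tests, fixed_hits / n_tests


if __name__ == "__main__":
    peeking, fixed = peeking_vs_fixed()
    print(f"peeking: {peeking:.1%} false positives, single look: {fixed:.1%}")
```

Because the final look is one of the peeks, the peeking rate can never come out lower than the single-look rate, and with several looks it is typically noticeably higher.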

It’s okay not to test

Running reliable tests that will give you definitive answers is hard.

Meanwhile, a 300% increase on a conversion rate of 0% is still 0%. Ship the damn product.

Your energy is likely better used elsewhere, and you can start testing when it actually DFM. It’s okay to not want to be a statistics expert.

In the meantime, try running a few A/A tests. You’ll be amazed at the “results.”

A/A Testing: How I increased conversions 300% by doing absolutely nothing http://t.co/PGN30rUkD5 (New blog post) pic.twitter.com/R27UraX3Fb

— David Kadavy (@kadavy) February 12, 2015